The “Hard Problem” of Consciousness

Recently we have been working nearly every day with ChatGPT, DALL-E, Midjourney, and Bard, and this has led us to question whether these generative AI models are intelligent.

The first problem you come across when trying to answer this question is that no one agrees on what intelligence is. From that perspective, the question becomes unanswerable. However, this is a bit of a cop-out. A better yardstick may be, “I can’t define intelligence, but I know what it looks like when I see it.” By that criterion, we think most people would agree that Artificial Narrow Intelligence (ANI) has been achieved.

ANI, also known as Weak AI, refers to AI systems designed to perform a specific task or a limited range of tasks. Unlike Artificial General Intelligence (AGI), or Strong AI, ANI lacks the ability to apply its intelligence broadly across a wide range of contexts. Examples of ANI include:

- Language Models like GPT-4 and PaLM 2: These are specialized in processing and generating human language. They can answer questions, write essays, compose poems, translate languages, and more, but their capabilities are largely confined to language-related tasks.

- Chess and Go Playing Programs like Deep Blue and AlphaGo: These AI systems are designed to play specific board games at a high level. For instance, Deep Blue (Figure 2) defeated world chess champion Garry Kasparov in 1997, and AlphaGo made headlines in 2016 by beating Lee Sedol, one of the world’s top Go players. Their intelligence is highly specialized for the strategies and intricacies of the games they are designed to play.

- Self-Driving Cars: Autonomous vehicles use AI to interpret sensor data, make decisions, and navigate roads safely. This involves real-time processing of a vast amount of data, including recognizing objects, predicting the behavior of other road users, and adhering to traffic rules.

- Personal Assistants like Siri, Alexa, and Google Assistant: These AI systems can perform a range of tasks, such as setting reminders, playing music, providing weather updates, and controlling smart home devices. Their abilities are tailored to specific user commands and interactions.

- Recommendation Systems: Used by platforms like Netflix, Amazon, Spotify, and YouTube, these AI systems analyze user data to recommend products, movies, songs, or videos. Their intelligence is focused on understanding user preferences and suggesting relevant content.

- Medical Diagnosis Tools: Some AI systems assist in diagnosing diseases by analyzing medical images, such as X-rays or MRI scans. They are trained to detect patterns indicative of specific medical conditions.

- Fraud Detection Systems: Used in the banking and financial sectors, these AI applications monitor transactions to detect unusual patterns that might indicate fraudulent activity.

- Manufacturing and Industrial Robots: These AI-driven machines are programmed to perform specific tasks such as assembling products, packaging, or painting with high precision and efficiency.

- Speech Recognition Systems: These are specialized in converting spoken words into text and are used in various applications like voice-controlled gadgets, dictation software, and automated customer support systems.

If we characterize intelligence as the ability to learn, understand, reason, make decisions, and adapt to new situations, then current ANIs clear this hurdle, at least within their narrow domains.

The Intelligence Evolutionary Tree

Biological

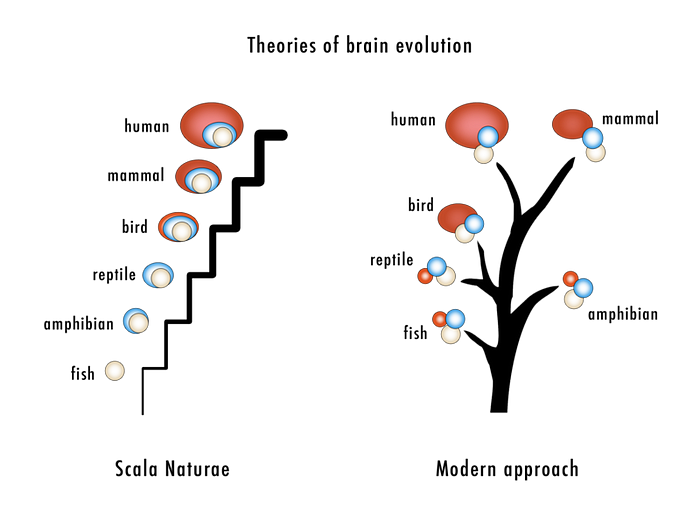

In terms of biological evolution, the idea of an “evolutionary tree of intelligence” based on increasing neural complexity is a fascinating concept, though it’s important to note that complexity doesn’t always correlate directly with what we might consider “intelligence.” Here’s a simplified overview of how neural complexity has increased over evolutionary time, keeping in mind that this is a broad generalization and that evolution is not a strictly linear process.

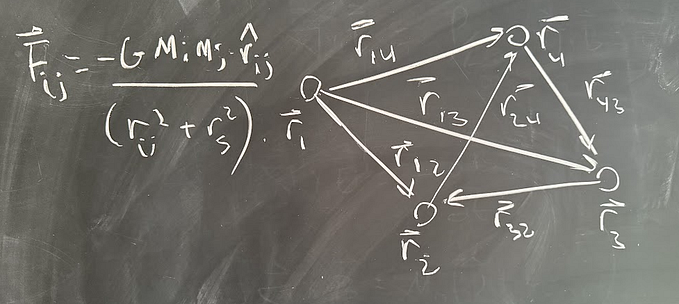

The concept of the “scala naturae,” also known as the Great Chain of Being, is an ancient philosophical idea that predates modern evolutionary theory. It’s not specifically a theory of brain evolution, but rather a hierarchical structure of all matter and life (Figure 3). This theory doesn’t align with modern evolutionary biology, which shows that evolution is not a linear process of progression towards greater complexity or ‘higher’ forms of life, but rather a branching tree with diverse adaptations.

The earliest nervous systems appeared in simple invertebrates such as jellyfish, whose diffuse nerve nets support only rudimentary sensory processing and motor control. Sponges, by contrast, lack neurons altogether.

More complex invertebrates like insects and cephalopods (e.g., octopuses) evolved central nervous systems. Octopuses, for example, have relatively complex brains and exhibit behaviors indicative of learning and problem-solving.

Early vertebrates developed centralized nervous systems, including brains and spinal cords. Fish, for example, have brains, but these are relatively simple compared with those of mammals.

Amphibians and reptiles show an increase in brain complexity. Reptilian brains, for instance, manage more complex behaviors and physiological regulation than those of fish.

Birds and mammals evolved from different branches of the early amniote lineage (birds from dinosaurs, mammals from synapsids) and exhibit significant increases in brain complexity. Birds, for example, have relatively large brains for their body size and are capable of complex behaviors.

Within mammals, there’s a wide range of brain complexities. Generally, primates (and especially humans) have the most complex brains. This complexity is not just about size but also the structure and density of neural connections, particularly in the neocortex, which is associated with higher-order functions like reasoning, planning, and language.

This progression does not imply that intelligence in animals is a straightforward scale from low to high. Instead, different animals have evolved different types of intelligence and cognitive abilities suited to their environments and lifestyles. For instance, the intelligence of a crow or a dolphin is different but not necessarily inferior to that of a primate. Evolution favors adaptations that are useful for survival and reproduction, not complexity for its own sake.

Artificial Intelligence

In the realm of AI, the progression of intelligence (Figure 4) can be seen in the development of increasingly sophisticated algorithms and systems:

- Early AI (1950s–1970s): The focus was on rule-based systems and simple problem-solving.

- Machine Learning (1980s–2000s): The development of algorithms that could learn from data marked a significant shift. This includes the evolution of neural networks, decision trees, and support vector machines.

- Deep Learning Era (2010s–present): The advent of deep learning brought remarkable advances in processing complex data such as images and natural language, first through convolutional neural networks (CNNs) and recurrent neural networks (RNNs), and from 2017 through transformers, the architecture behind models like GPT-4.

- Towards AGI: The current frontier involves efforts to move towards Artificial General Intelligence, though this remains a theoretical goal as of now.

In both biological and artificial contexts, the “tree” of intelligence is characterized by diverse, branching developments rather than a single, linear path. Each branch represents adaptations or technologies suited to specific needs and environments, whether it’s surviving in a jungle or navigating the vast complexities of human language.

Artificial General Intelligence (AGI)

Achieving Artificial General Intelligence (AGI) would require substantial technological advances, enabling AI systems to perform a broader and more adaptable range of functions (Figure 5). This would involve creating algorithms capable of learning and generalizing across many tasks and domains, going beyond today’s deep learning and reinforcement learning methods, perhaps by integrating them with unsupervised learning, transfer learning, and meta-learning.

AGI requires a deeper understanding of language: the ability to grasp context, subtlety, and nuance, and to formulate coherent, context-appropriate responses. It also needs a sophisticated memory system that can retain and use information over the long term, much like human long-term memory.

AGI’s learning and adaptation should match human efficiency: learning from minimal data or even single examples, and transferring knowledge seamlessly across domains. Equally crucial are advanced perception that integrates multiple sensory inputs, and autonomous decision-making, planning, and execution in complex environments.

Additionally, AGI must exhibit genuine creativity and ethical reasoning aligned with human values. These advancements represent a fundamental shift from current AI, requiring breakthroughs in machine learning, reasoning, memory, and interaction.

What about Consciousness?

Consciousness is a complex and multidimensional concept that has been the subject of philosophical, psychological, and scientific inquiry for centuries. It generally refers to the state or quality of awareness, or, more specifically, the ability to experience feelings and thoughts, to be aware of oneself and one’s surroundings.

It has been argued that we can never understand consciousness because we can’t separate ourselves from that state. We can never achieve an objective perspective because we are immersed in it.

Philosopher Thomas Nagel famously articulated a related argument in his 1974 essay “What Is It Like to Be a Bat?” Nagel argued that an organism’s subjective experience is inherently inaccessible to others; for instance, we can never objectively know what it is like for a bat to experience the world through echolocation.

An important point is that you can have intelligence without consciousness. Intelligence and consciousness are distinct concepts:

- Intelligence refers to the ability to learn, understand, and apply knowledge, solve problems, and adapt to new situations. In humans, this is often associated with cognitive processes like reasoning, memory, and learning.

- Consciousness, on the other hand, is about subjective experience and awareness. It involves being aware of oneself and one’s surroundings, having personal experiences, feelings, and thoughts.

Current AI systems (ANI) demonstrate a form of intelligence. They can process vast amounts of data, recognize patterns, solve specific problems, generate language, and even learn from new information to some extent. However, this is done without consciousness. AI systems do not have personal experiences or subjective awareness; they operate based on algorithms and data processing.

In biological beings, the relationship between intelligence and consciousness is complex and not fully understood. While higher intelligence in animals often correlates with what we interpret as signs of consciousness (such as in primates, dolphins, and elephants), the exact nature of this relationship is a topic of ongoing scientific and philosophical investigation.

Can You Create Consciousness?

To create consciousness, you would first have to agree on a definition of what it is, and there is no universally accepted one. Consciousness involves subjective experience, awareness, and the ability to perceive one’s own existence, all of which are difficult to quantify or replicate artificially.

In humans and other animals, consciousness is believed to arise from complex neural processes. Replicating these processes artificially, or understanding them well enough to create consciousness, is a challenge that has not yet been met.

Some theorists, most notably Roger Penrose and Stuart Hameroff, have proposed that quantum phenomena might play a role in consciousness. Their Orchestrated Objective Reduction (Orch-OR) theory suggests that quantum processes in microtubules inside the brain’s neurons could contribute to the generation of consciousness. The theory is highly speculative, and part of its appeal may be that it is all too easy to throw anything we don’t understand into the quantum bucket.

Current AI and robotics are far from replicating the kind of complex neural architectures seen in biological brains. And even if we could replicate them, we need to start considering whether we should. The idea of creating consciousness raises profound ethical and philosophical questions: if it were possible, we would have to confront the rights, treatment, and moral status of such conscious entities.

EDIT: 3rd January 2024

We recently came across an interesting paper that directly addresses the question of AI consciousness: “Consciousness in Artificial Intelligence: Insights from the Science of Consciousness.” In it, 19 researchers derive a checklist of indicator properties from scientific theories of consciousness and assess a variety of AI systems against it. Their conclusion was:

Our analysis suggests that no current AI systems are conscious, but also suggests that there are no obvious technical barriers to building AI systems which satisfy these indicators.

On the other hand, a recent TikTok video showed a ChatGPT-enabled robot recognizing itself in a mirror…
